There are a lot of threads around asking, “Is cloud computing less expensive than on-premise?” The short answer is: it depends. Here’s a quick outline of why the better question is which one provides more value.

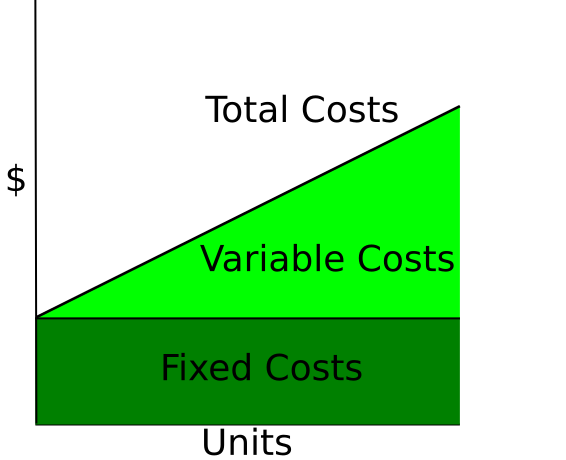

In economics, there are two types of production costs: fixed costs and variable costs. Fixed costs are independent of the level of production: buying a server is a fixed cost, regardless of how many VMs run on that server. Variable costs are the per-unit costs of production: the cost of applying operating system patches is a variable cost, because it depends on the number of VMs. Investing in fixed costs, such as servers that support higher VM density, creates an economy of scale by reducing the variable cost of providing another OS instance to your customers. This is the production side of cloud economics.
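One simple way to see the economy of scale is to compute the average cost per VM. This minimal Python sketch assumes a linear cost model: total cost equals a fixed server cost plus a constant per-VM variable cost. The function name and the dollar figures are hypothetical and serve only as illustration.

```python
def average_cost_per_vm(fixed_cost: float, variable_cost_per_vm: float, vm_count: int) -> float:
    """Average cost of one VM under a simple linear cost model (hypothetical).

    Total cost = fixed_cost + variable_cost_per_vm * vm_count, so the
    average per VM is fixed_cost / vm_count + variable_cost_per_vm.
    """
    if vm_count <= 0:
        raise ValueError("vm_count must be positive")
    return fixed_cost / vm_count + variable_cost_per_vm


# Hypothetical figures: a $10,000 server and $50 per VM for patching.
for vms in (10, 25, 50):
    print(f"{vms:>3} VMs -> ${average_cost_per_vm(10_000, 50, vms):,.2f} per VM")
```

As density rises, the fixed server cost is spread over more VMs, so the average cost per VM falls from $1,050 at 10 VMs to $250 at 50 VMs. The model deliberately ignores real-world limits such as server capacity.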

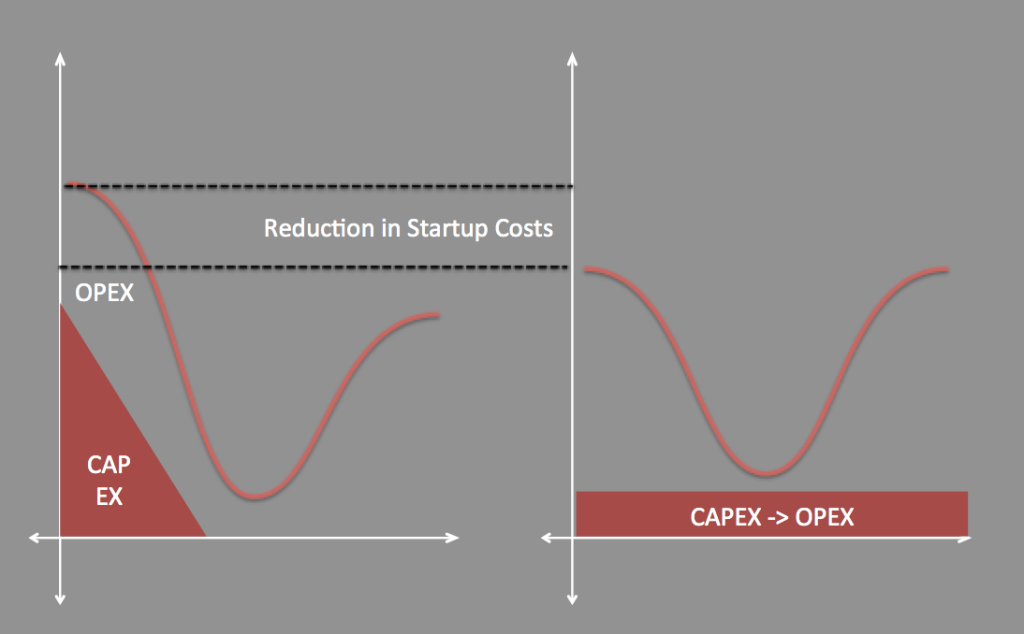

On the consumption side, moving to the cloud converts the fixed capital expense (CapEx) of hardware into a variable operating expense (OpEx). Tying the cost of the infrastructure to the lifetime of the project reduces upfront costs.

Spreading the costs over the life of the project does increase the project's terminal costs; however, this is not the problem it seems. With the cloud model, it is also simpler to shut the project down when it no longer provides the necessary value.
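The trade-off between upfront CapEx and pay-as-you-go OpEx can be sketched the same way. This minimal example assumes the on-premise hardware is paid for in full on day one and has no resale value. It also ignores power, staffing, and discounts. The class name, the prices, and the 36-month horizon are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CostScenario:
    """Hypothetical comparison of upfront hardware vs. a monthly cloud fee."""

    hardware_price: float  # CapEx: paid in full when the project starts
    monthly_fee: float     # OpEx: paid only while the project runs

    def on_premise_cost(self, months_run: int) -> float:
        # Simplification: no resale value, so the full price is spent
        # whether the project runs for 1 month or 36 (months_run is unused).
        return self.hardware_price

    def cloud_cost(self, months_run: int) -> float:
        # Cloud spend grows with how long the project actually runs.
        return self.monthly_fee * months_run

    def break_even_months(self) -> float:
        # Months at which cumulative cloud spend equals the hardware price.
        return self.hardware_price / self.monthly_fee


scenario = CostScenario(hardware_price=36_000, monthly_fee=1_200)
for months in (6, 36):
    print(months, scenario.on_premise_cost(months), scenario.cloud_cost(months))
print("break-even after", scenario.break_even_months(), "months")
```

If the project runs for the full 36 months, the cloud option costs more (43,200 vs. 36,000), which reflects the higher terminal cost. If the project is shut down after 6 months, the cloud option costs 7,200, while the hardware would still have cost 36,000. Under these assumptions, break-even falls at 30 months.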

Spreading the cost across the life of a project lowers both the upfront cost and the risk of starting it, so it becomes easier and cheaper to begin more new projects. Because unprofitable projects are also simple to exit, these new projects carry less organizational risk: they leave behind less legacy. More projects at lower risk = more value creation, faster. Or, as we like to say in marketing: value creation through innovation.